Reinforcement Learning: Q-Learning, SARSA, Value Iteration

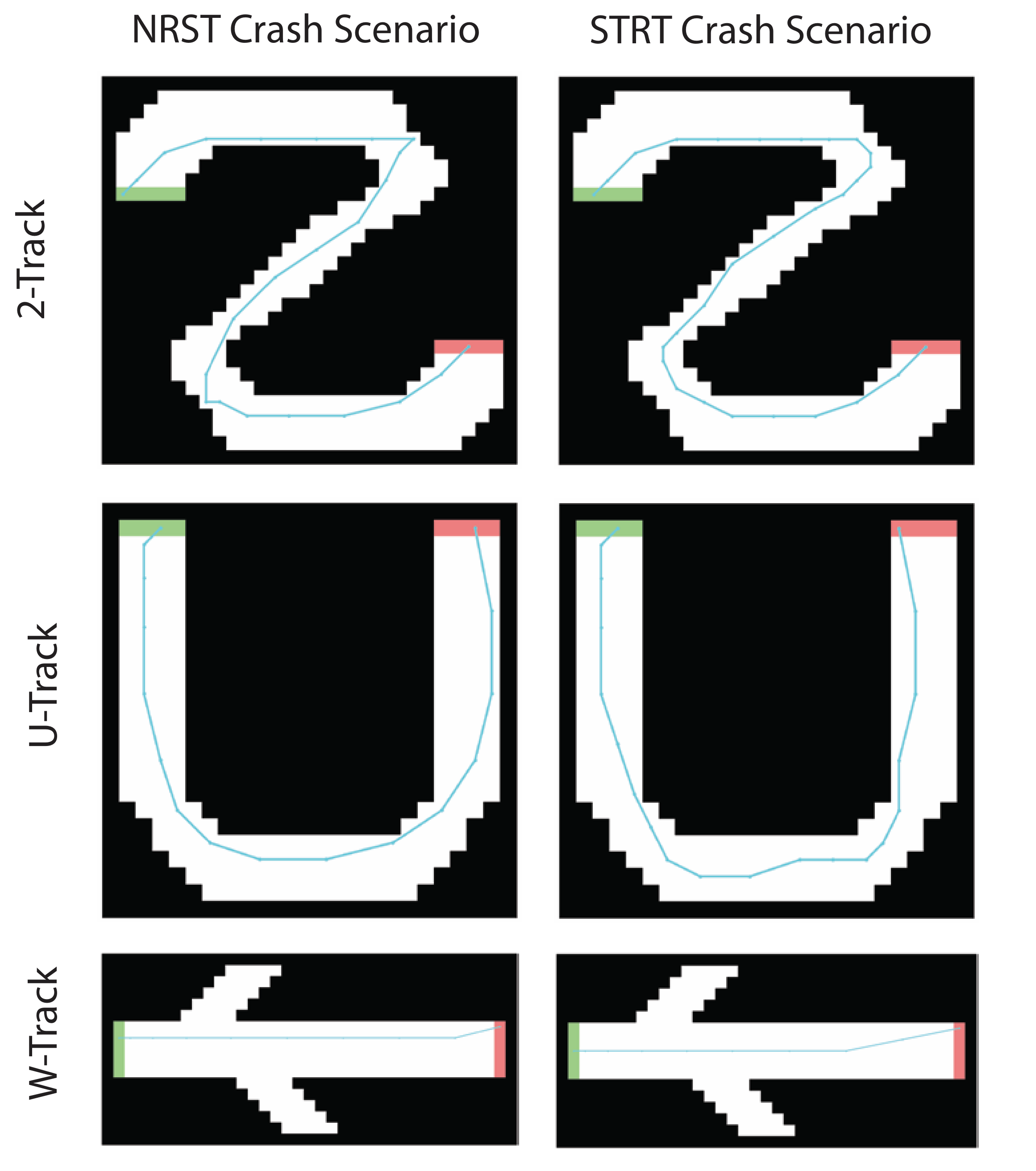

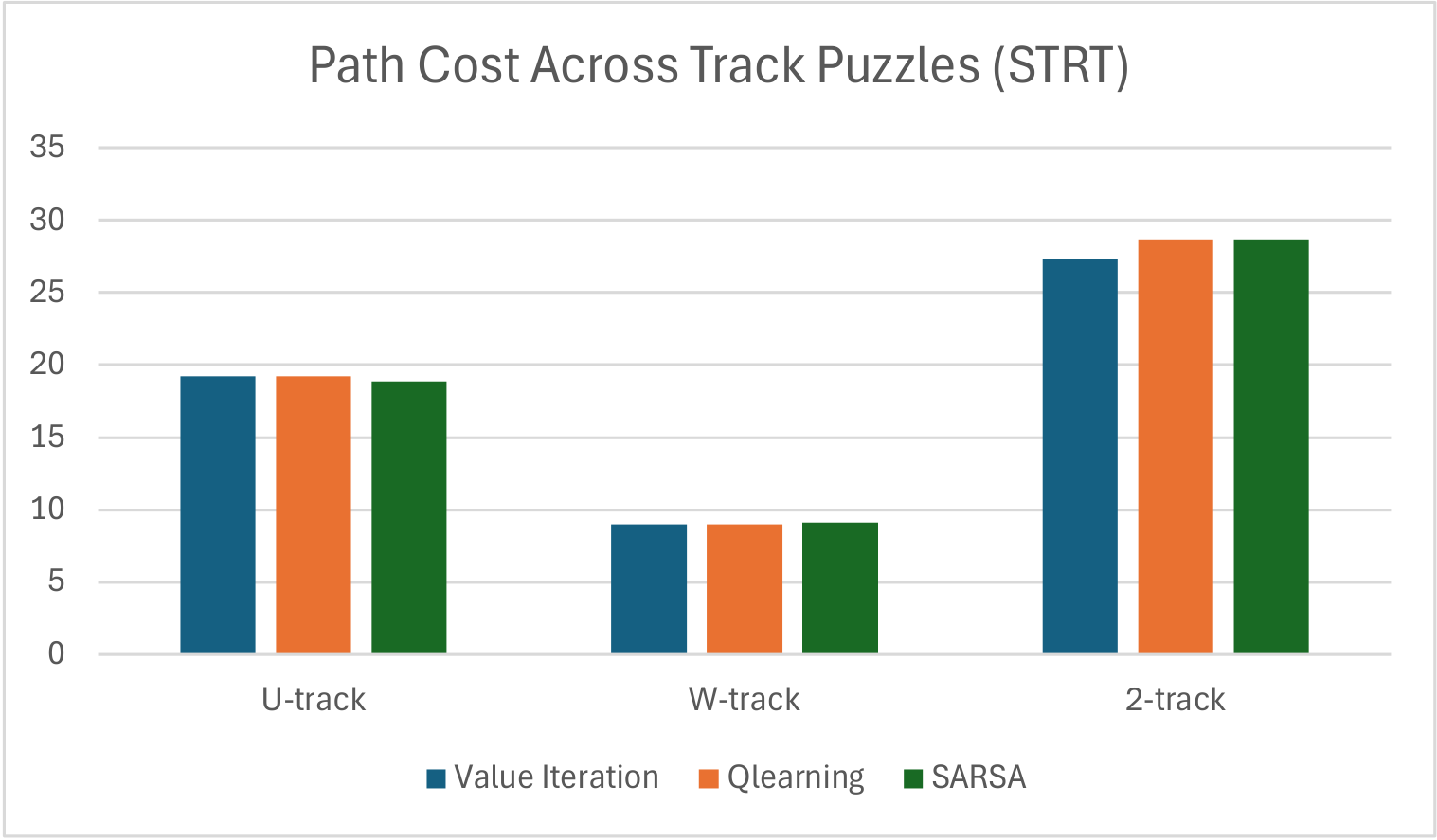

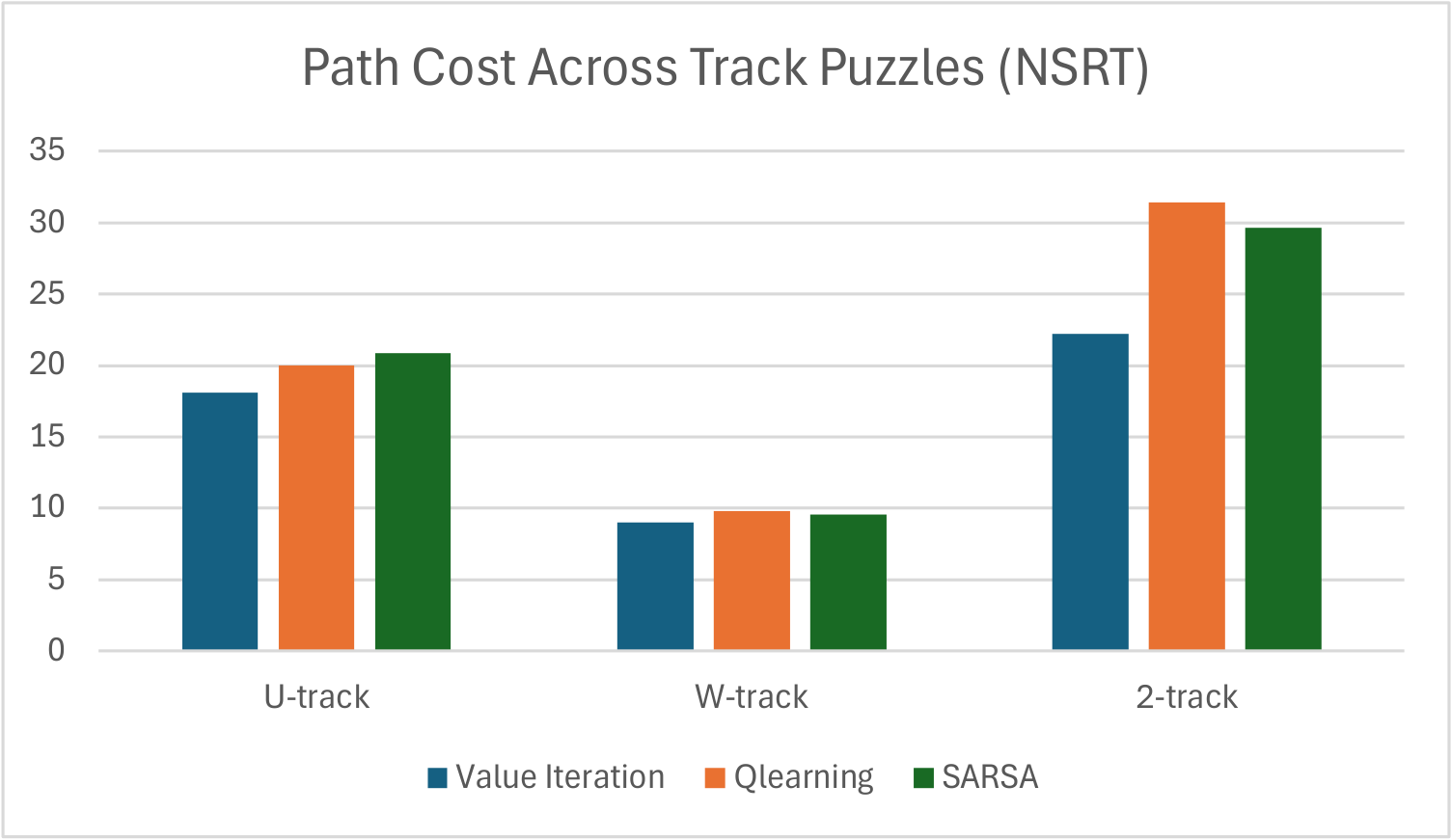

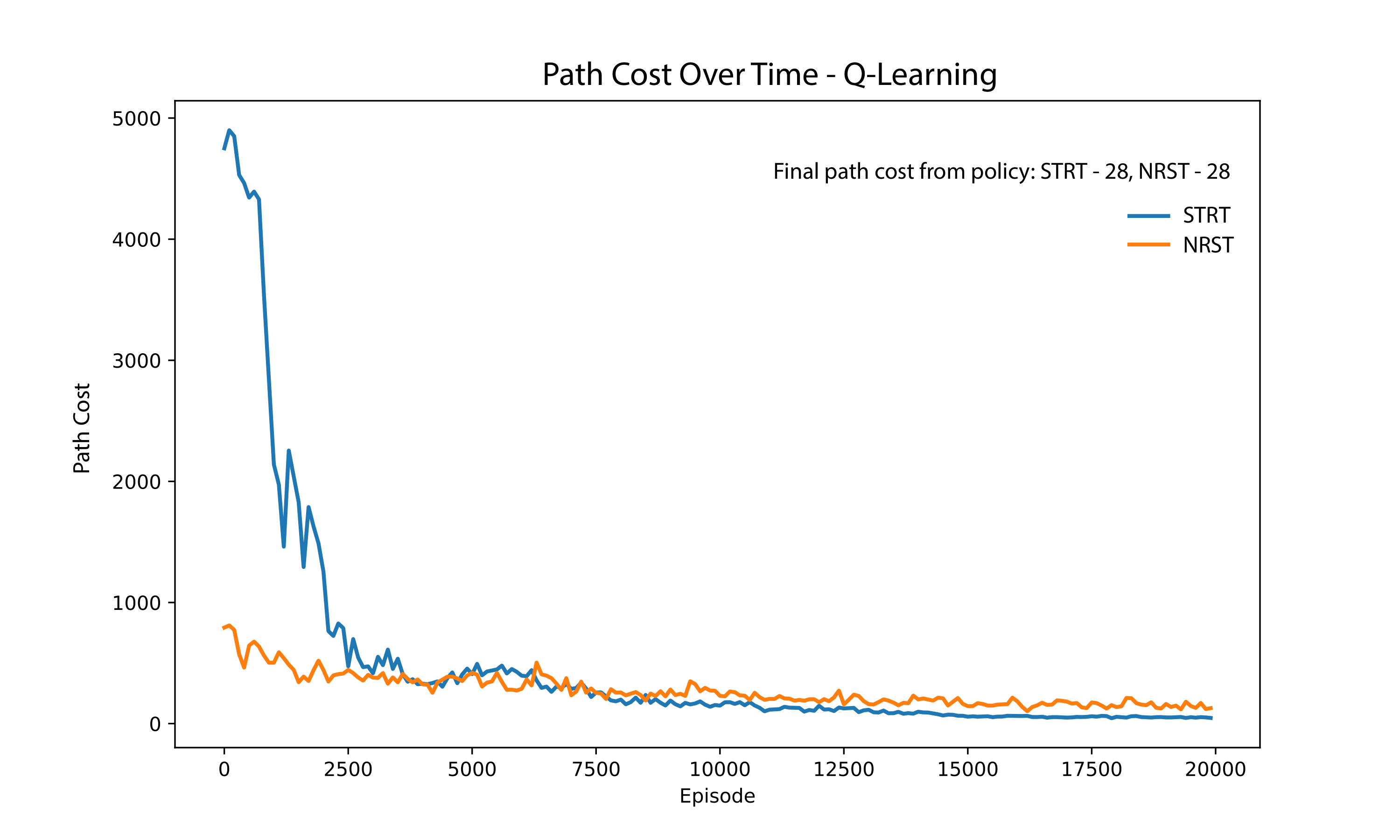

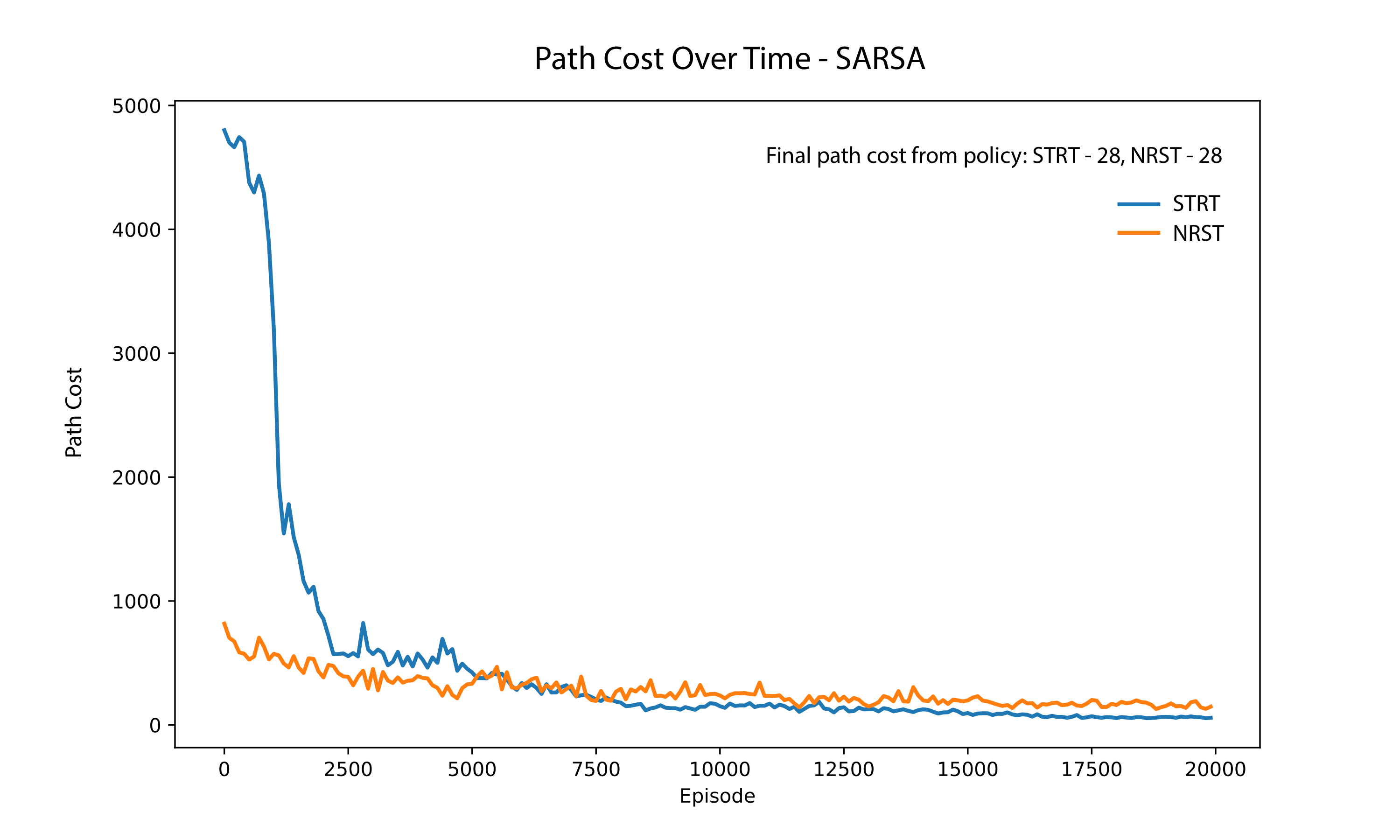

Description: For this project, I worked in a team at Montana State University. We were tasked with implementing two model-free reinforcement learning algorithms, Q-Learning and SARSA, and one model-based algorithm, Value Iteration, on a grid-based racetrack problem. We then compared the algorithms under two crash scenarios. In the first, the car resets to the nearest legal point on the track after a crash; in the second, it resets all the way to the starting line. Finally, we compared the model-free and model-based results for both crash scenarios on three different tracks.
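To illustrate how the two model-free algorithms differ, here is a minimal sketch of their tabular update rules. It is not the project's code: the function names, the dictionary-based Q-table, and the default values for `alpha` (learning rate) and `gamma` (discount factor) are assumptions chosen for illustration.

```python
from collections import defaultdict


def q_learning_update(Q, s, a, r, s_next, actions, alpha=0.5, gamma=0.9):
    """Off-policy update: bootstrap from the best action in the next state."""
    best_next = max(Q[(s_next, b)] for b in actions)
    Q[(s, a)] += alpha * (r + gamma * best_next - Q[(s, a)])


def sarsa_update(Q, s, a, r, s_next, a_next, alpha=0.5, gamma=0.9):
    """On-policy update: bootstrap from the action actually taken next."""
    Q[(s, a)] += alpha * (r + gamma * Q[(s_next, a_next)] - Q[(s, a)])


def make_q_table():
    """Hypothetical helper: Q-table keyed by (state, action), defaulting to 0."""
    return defaultdict(float)
```

The only difference is the bootstrap target: Q-Learning uses the maximum Q-value over the next state's actions, while SARSA uses the Q-value of the action the agent actually takes next. On a racetrack, a state would typically encode the car's position and velocity, and an action its acceleration, though that encoding is also an assumption here.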

Results: The model-free algorithms performed slightly worse than Value Iteration in most cases. Unexpectedly, the full-restart crash scenario often resulted in a lower path cost than the nearest-point reset.

Technologies: Python, NumPy, Matplotlib, UML, LaTeX

Note: If you would like to see the full design document, code base, and research paper for this project, please feel free to reach out to me by email.