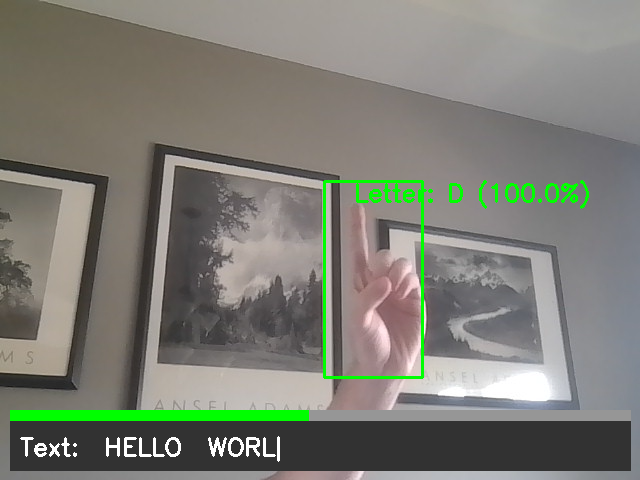

Hand Tracking / ASL Alphabet Detection

Description: For this project I developed a real-time ASL alphabet detection system using computer vision and machine learning. I used MediaPipe to extract hand landmarks from each video frame, built a training dataset that uses those landmarks as features, and trained a Multi-layer Perceptron (MLP) classifier to distinguish the letters of the alphabet. I also added a visual feedback interface that lets users type out words. It shows a confidence score for each predicted letter and a progress bar that tracks recognition confidence over time.
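A minimal sketch of two pieces of this pipeline, written in plain Python so it runs without MediaPipe or a trained model. MediaPipe Hands reports 21 landmarks per hand, each with x, y and z, so one hand flattens to 63 features. The wrist-relative normalization, the `landmarks_to_features` and `LetterTyper` names, and the `min_confidence` / `hold_frames` values are all hypothetical choices for illustration, not the project's actual code. `LetterTyper.update` expects one frame's class probabilities, which an MLP with a softmax output (from whichever library trained it) would provide, and returns a progress value suitable for driving a progress bar.

```python
# MediaPipe Hands reports 21 landmarks per hand, each with (x, y, z).
NUM_LANDMARKS = 21


def landmarks_to_features(landmarks):
    """Flatten 21 (x, y, z) landmarks into a 63-value feature vector.

    Assumed normalization (hypothetical): coordinates are made relative to
    the wrist (landmark 0) and divided by the largest absolute offset, so the
    features do not depend on where the hand sits in the frame or its size.
    """
    if len(landmarks) != NUM_LANDMARKS:
        raise ValueError(f"expected {NUM_LANDMARKS} landmarks, got {len(landmarks)}")
    wx, wy, wz = landmarks[0]
    rel = [(x - wx, y - wy, z - wz) for x, y, z in landmarks]
    # Fall back to 1.0 if every point coincides with the wrist.
    scale = max(abs(v) for point in rel for v in point) or 1.0
    return [v / scale for point in rel for v in point]


class LetterTyper:
    """Hypothetical hold-to-type logic: a letter is appended once it has been
    the confident top prediction for `hold_frames` consecutive frames."""

    def __init__(self, min_confidence=0.8, hold_frames=15):
        self.min_confidence = min_confidence
        self.hold_frames = hold_frames
        self.candidate = None  # letter currently being held
        self.count = 0         # consecutive frames it has been held
        self.text = ""         # letters typed so far

    def update(self, probabilities):
        """Take one frame's {letter: probability} dict; return progress in [0, 1]."""
        letter, confidence = max(probabilities.items(), key=lambda kv: kv[1])
        if confidence < self.min_confidence:
            # Not confident enough: reset the hold.
            self.candidate, self.count = None, 0
            return 0.0
        if letter != self.candidate:
            # A different letter started: restart the hold for it.
            self.candidate, self.count = letter, 0
        self.count += 1
        if self.count >= self.hold_frames:
            # Held long enough: commit the letter and reset.
            self.text += letter
            self.candidate, self.count = None, 0
            return 1.0
        return self.count / self.hold_frames
```

Because the counter resets after each commit, holding a sign continuously types the letter again every `hold_frames` frames, which allows double letters. An interface could instead require the hand to change shape or leave the frame before repeating.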

Technologies: Python, OpenCV, TensorFlow, MediaPipe, NumPy, Human-Computer Interaction

Future Additions: I would eventually like the model to recognize dynamic gestures, meaning signs defined by motion rather than a single static hand shape. This would require adding a model that works on sequences of frames, such as an LSTM (Long Short-Term Memory) recurrent neural network or a 3D convolutional neural network.
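A sequence model needs a sequence as input rather than a single frame. The sketch below shows one way that input could be assembled: keep a sliding window of the most recent per-frame landmark feature vectors (for example the 63 values from one hand), and treat each full window as one sample. The `FrameWindow` name and the 30-frame window length are hypothetical. For reference, Keras' `LSTM` layer expects input shaped `(batch, timesteps, features)` by default, so stacking several windows would give that shape; a 3D CNN would instead consume stacks of raw image frames.

```python
from collections import deque


class FrameWindow:
    """Hypothetical sliding window over the most recent `length` frames.

    Each full window is a (timesteps, features) sample for a sequence model.
    """

    def __init__(self, length=30):
        # deque with maxlen drops the oldest frame automatically.
        self.frames = deque(maxlen=length)

    def push(self, features):
        """Add one frame's feature vector (e.g. 63 landmark values)."""
        self.frames.append(list(features))

    def ready(self):
        """True once the window holds `length` frames."""
        return len(self.frames) == self.frames.maxlen

    def as_sequence(self):
        """Return the window, oldest frame first, as a list of lists."""
        if not self.ready():
            raise ValueError("window is not full yet")
        return [list(frame) for frame in self.frames]
```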