Machine Learning Project 4 - A Comparison of Neural Network Training Methods

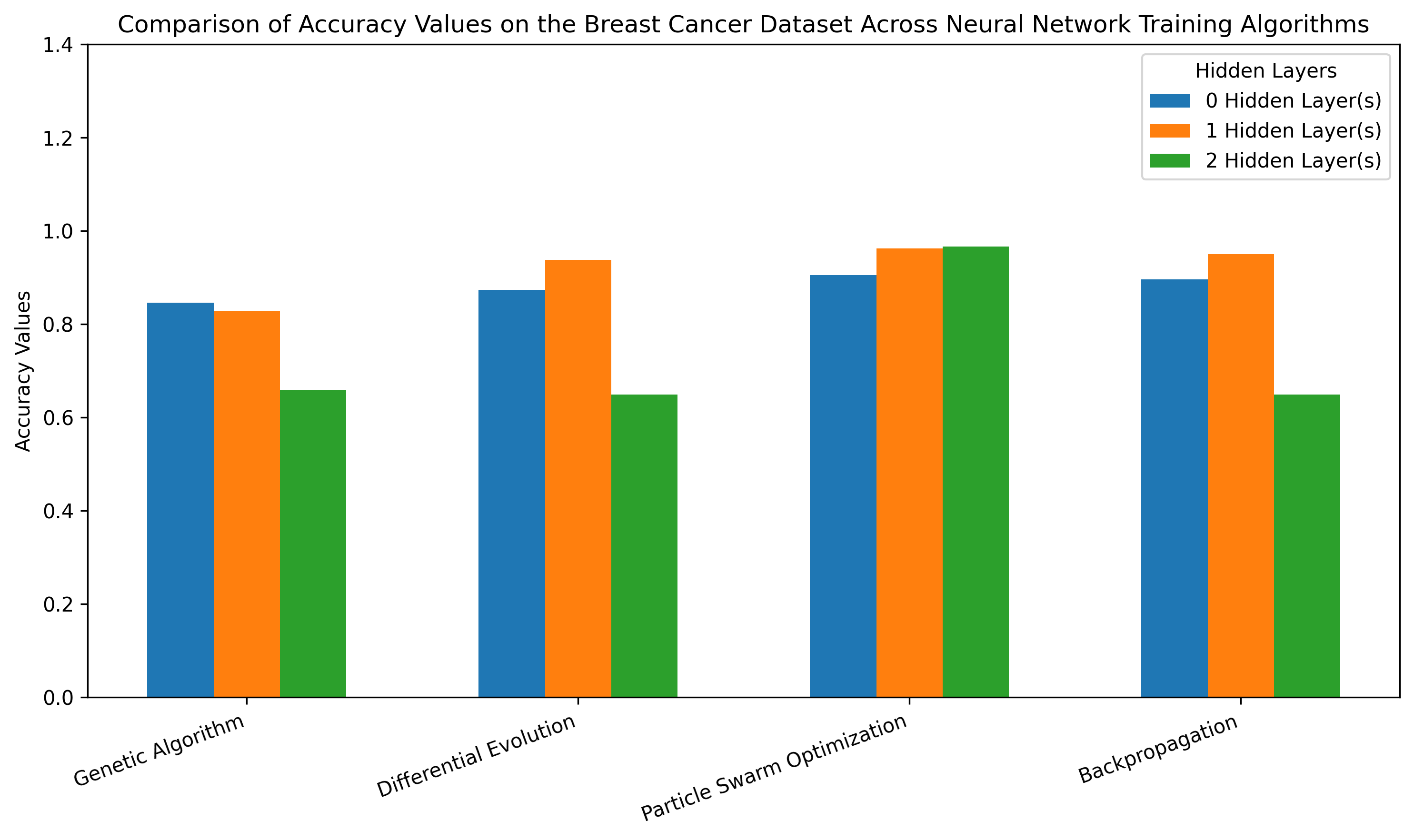

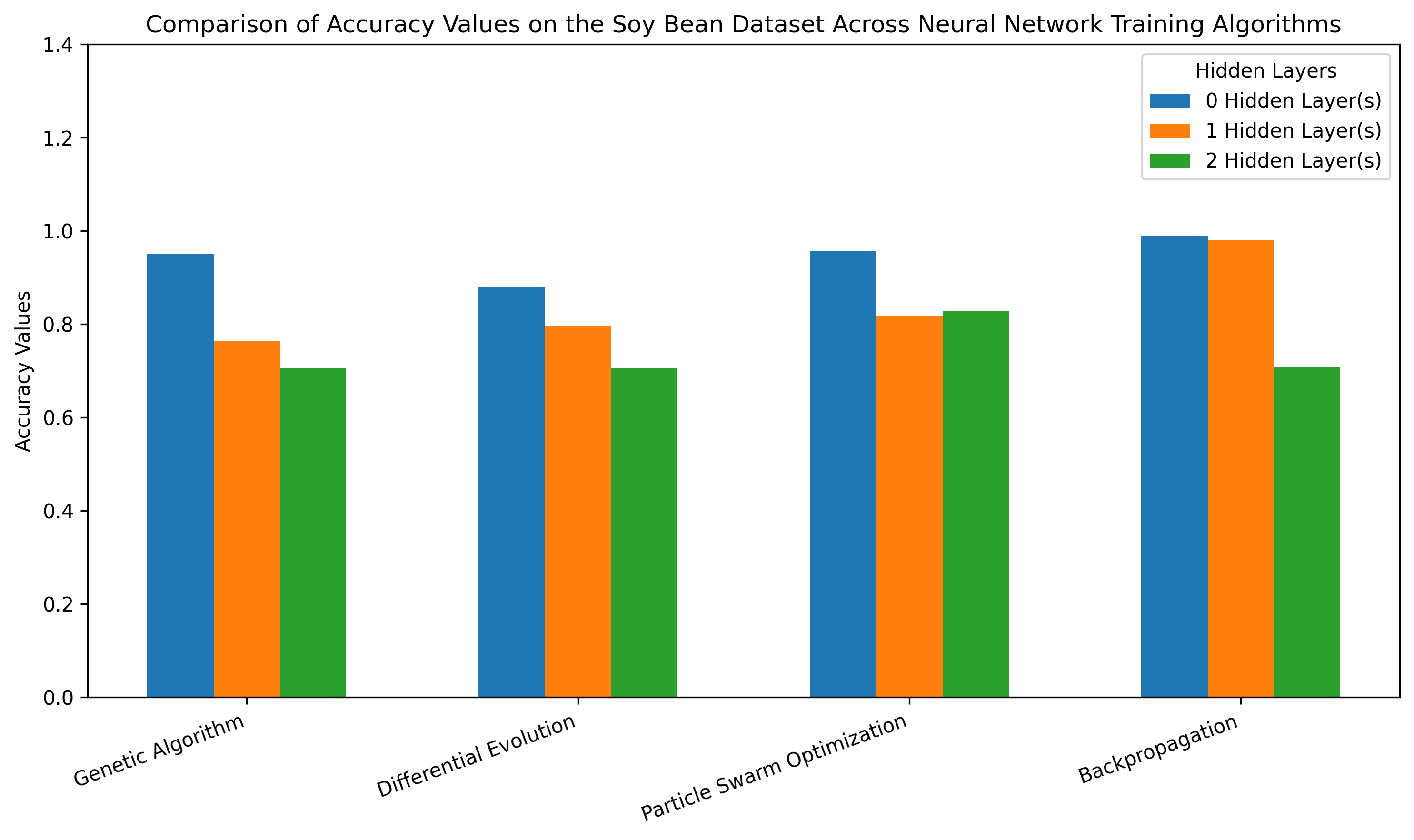

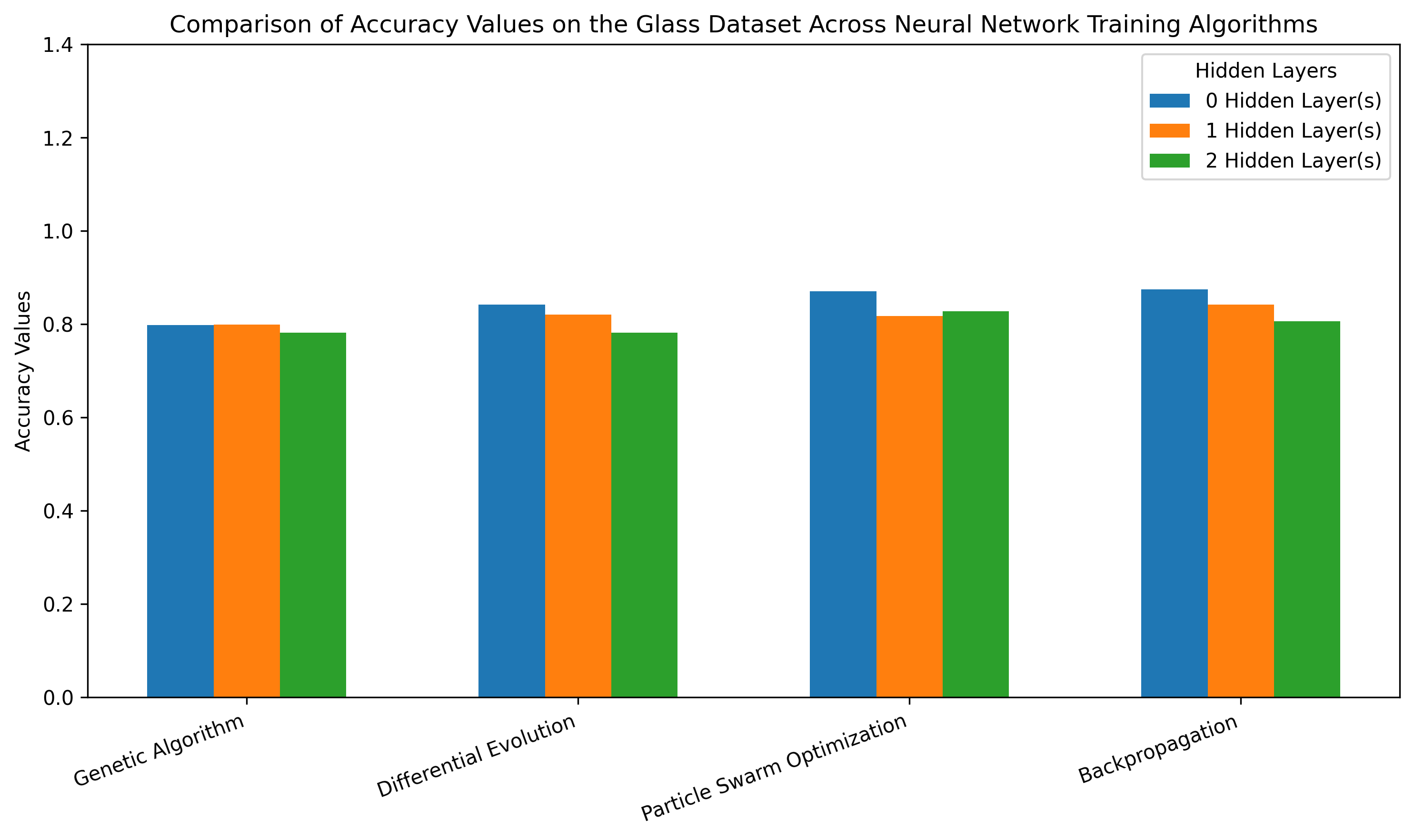

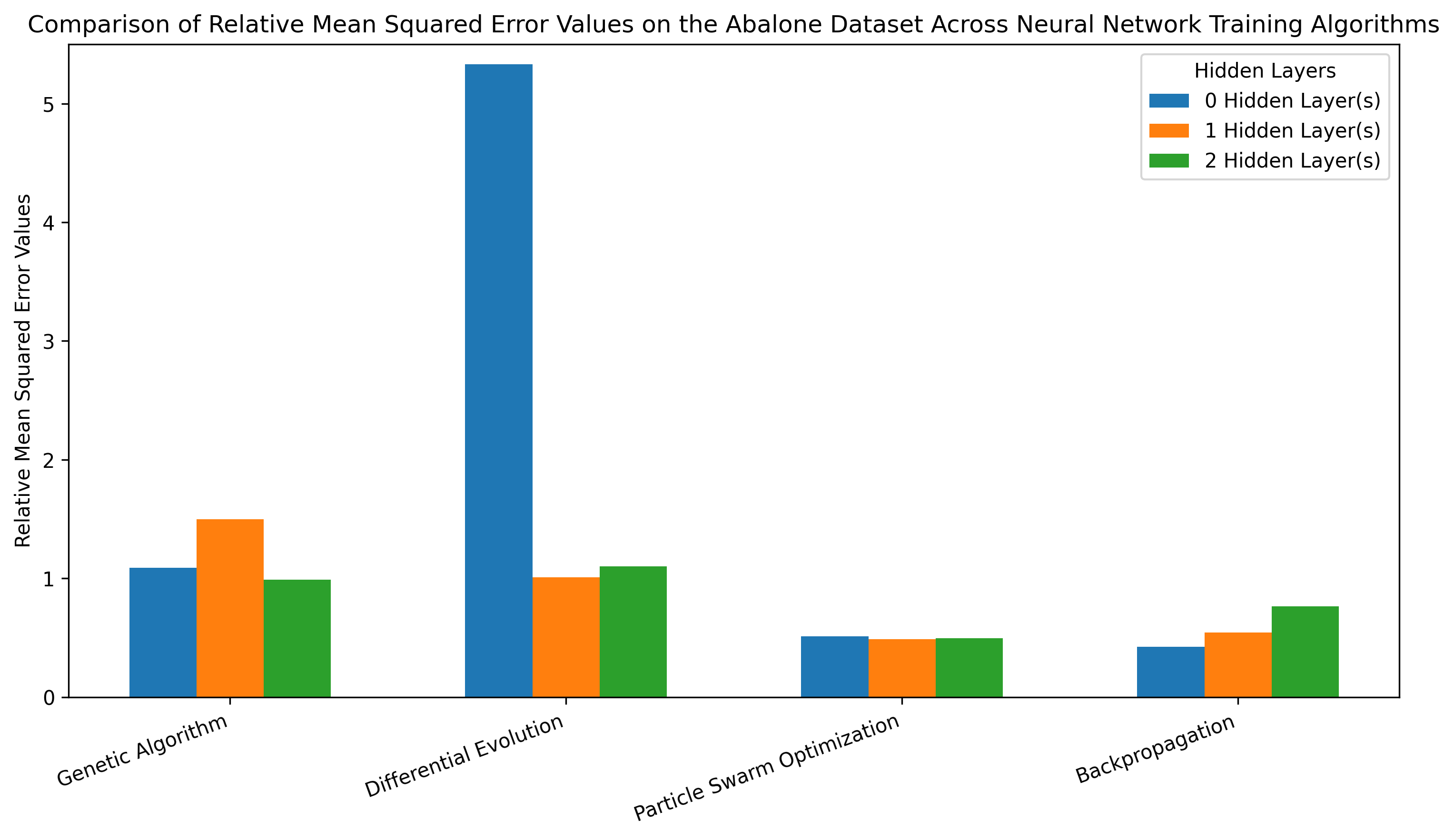

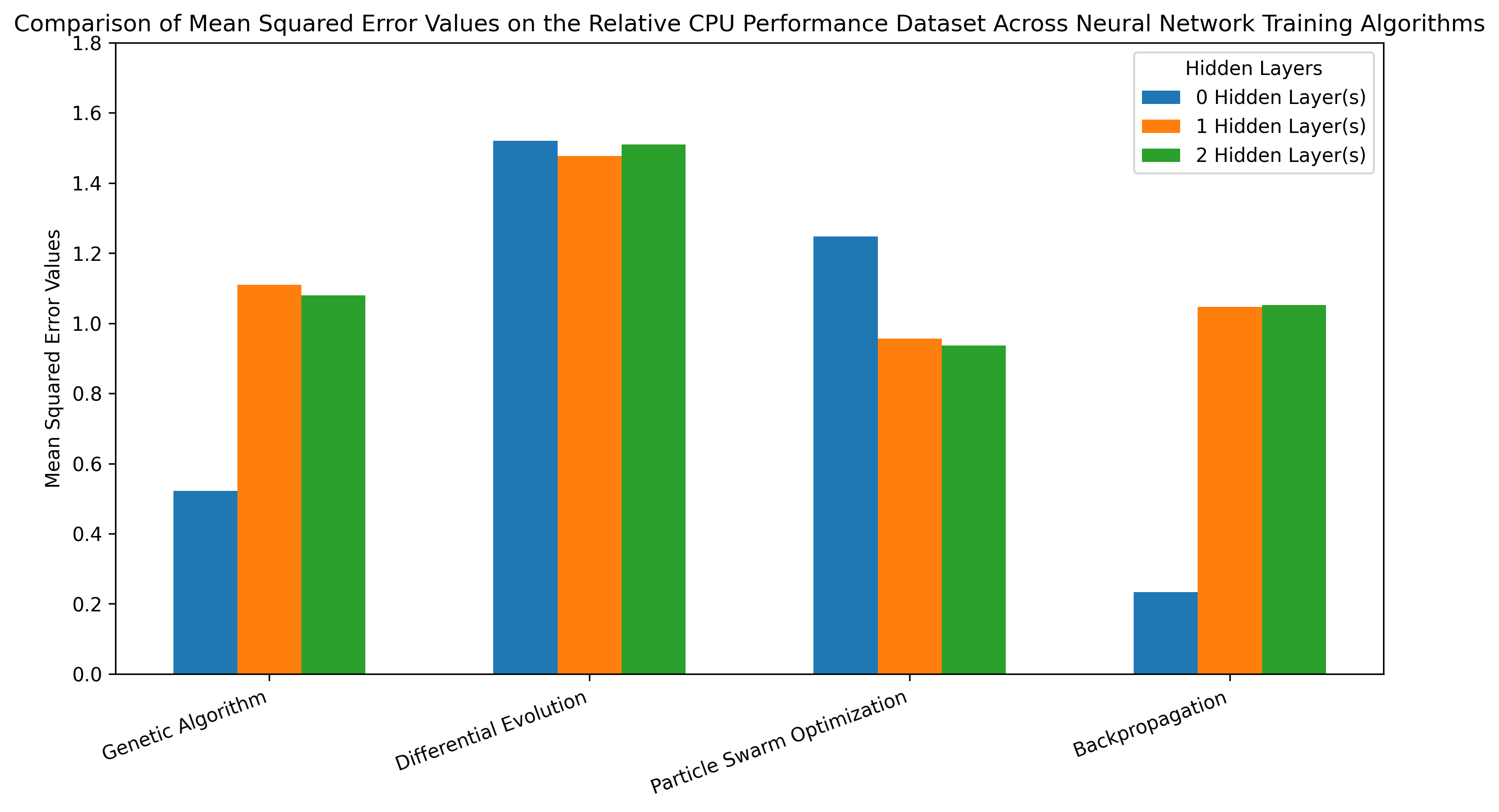

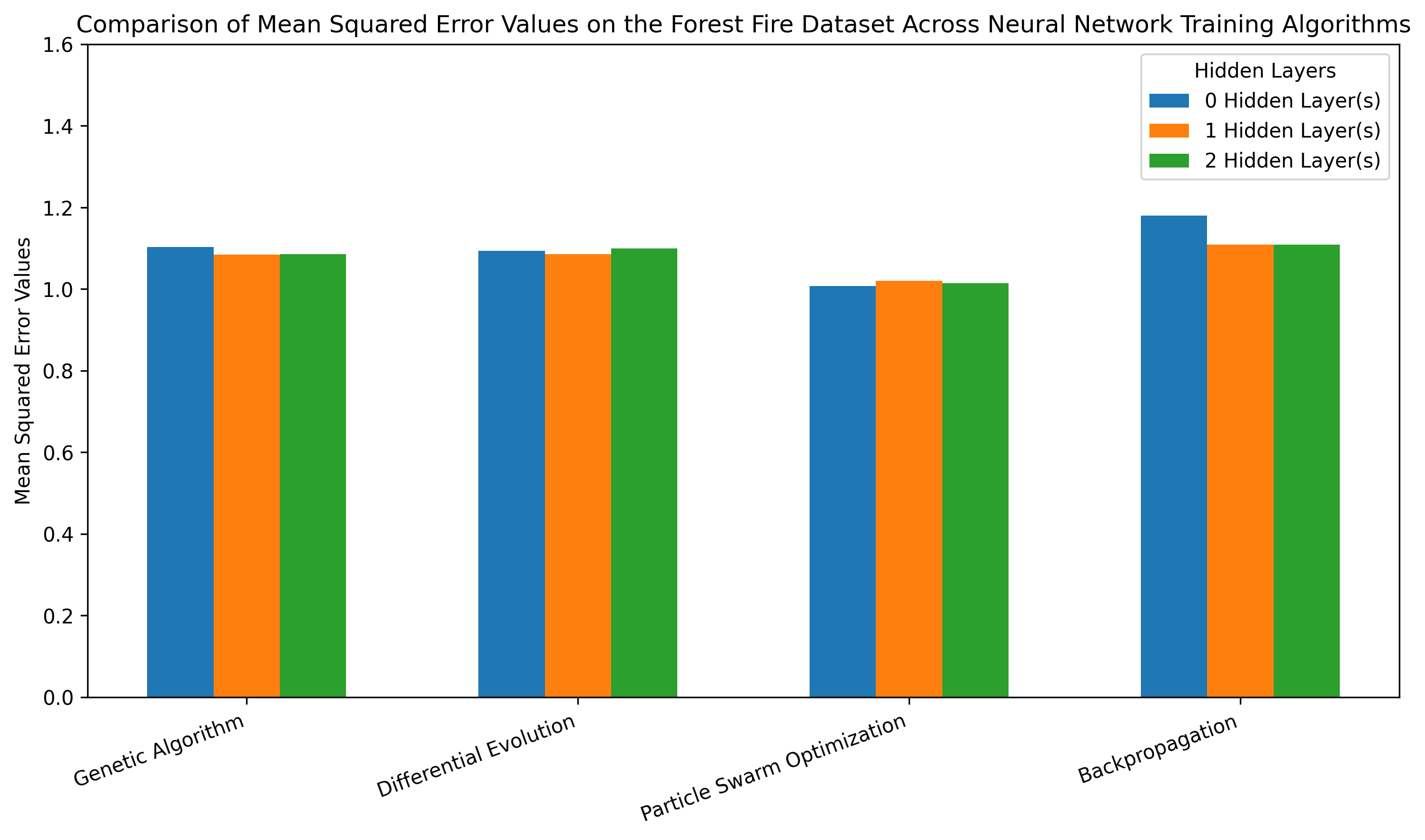

Description: For this project, I worked in a team with two other students at Montana State University. We were tasked with implementing several different training methods for a neural network. We used the Breast Cancer Wisconsin, Glass Identification, Soybean (Small), Abalone, Forest Fires, and Computer Hardware datasets. This project built on Project 3: we reused the network structure created there, but this time we tested other training methods. We used backpropagation as our baseline, then trained the same network with a genetic algorithm, differential evolution, and particle swarm optimization. Because of the complexity of these algorithms and the computational power they require, we tuned only a small subset of the available hyperparameters. Once again, we performed 10-fold cross-validation on every dataset and compared the results across all the training methods.
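To give a feel for how a population-based method can train a network without gradients, here is a minimal sketch of differential evolution applied to a flattened weight vector. It is not our project code: the DE/rand/1/bin variant, the one-hidden-layer tanh network, the mean squared error loss, and every name and default value (`make_mse_loss`, `differential_evolution`, `F`, `CR`, `pop_size`) are assumptions made for illustration.

```python
import numpy as np


def make_mse_loss(X, y, n_hidden):
    """Build a loss over a flat weight vector for a hypothetical
    one-hidden-layer regression network (assumed architecture)."""
    n_in = X.shape[1]
    split1 = n_in * n_hidden          # end of input-to-hidden weights
    split2 = split1 + n_hidden        # end of hidden biases
    dim = split2 + n_hidden + 1       # plus output weights and output bias

    def loss(w):
        W1 = w[:split1].reshape(n_in, n_hidden)
        b1 = w[split1:split2]
        W2 = w[split2:-1]
        b2 = w[-1]
        hidden = np.tanh(X @ W1 + b1)
        pred = hidden @ W2 + b2
        return float(np.mean((pred - y) ** 2))

    return loss, dim


def differential_evolution(loss, dim, pop_size=20, F=0.5, CR=0.9,
                           generations=50, seed=0):
    """DE/rand/1/bin sketch: each individual is one full set of weights."""
    rng = np.random.default_rng(seed)
    pop = rng.normal(0.0, 1.0, size=(pop_size, dim))
    fitness = np.array([loss(ind) for ind in pop])
    history = [fitness.min()]         # best loss per generation

    for _ in range(generations):
        for i in range(pop_size):
            # Pick three distinct individuals, none of them the target i.
            others = [j for j in range(pop_size) if j != i]
            a, b, c = pop[rng.choice(others, size=3, replace=False)]
            mutant = a + F * (b - c)

            # Binomial crossover; force at least one gene from the mutant.
            cross = rng.random(dim) < CR
            cross[rng.integers(dim)] = True
            trial = np.where(cross, mutant, pop[i])

            # Greedy selection: keep the trial only if it is no worse.
            trial_fit = loss(trial)
            if trial_fit <= fitness[i]:
                pop[i] = trial
                fitness[i] = trial_fit
        history.append(fitness.min())

    best = int(np.argmin(fitness))
    return pop[best], fitness[best], history
```

Because selection is greedy, the best loss in the population can never get worse from one generation to the next. Each candidate costs a full forward pass over the training data, which is one reason these methods are so much more expensive to tune than backpropagation.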

Results: Model performance varied considerably from dataset to dataset. Datasets that tended to overfit with backpropagation often performed better with the population-based methods, while relatively simple datasets often performed drastically better with backpropagation.

Technologies: Python, NumPy, Matplotlib, UML, LaTeX

Note: If you would like to see the full design document, code base, and research paper that go with this project, please feel free to reach out to me by email.